Over the years, many web-scale companies have designed massively scalable and highly available services using load balancer solutions built on commodity Linux servers, completely replacing traditional middleboxes with software load balancers. In this post we will look at the common building blocks across Microsoft’s Ananta, Google’s Maglev, Facebook’s Shiv, GitHub’s GLB and Yahoo’s L3 DSR. We will then see how Kube-router has implemented some of these building blocks for Kubernetes, and how you can leverage them to build a highly available and scalable ingress in bare-metal deployments.

Network Design

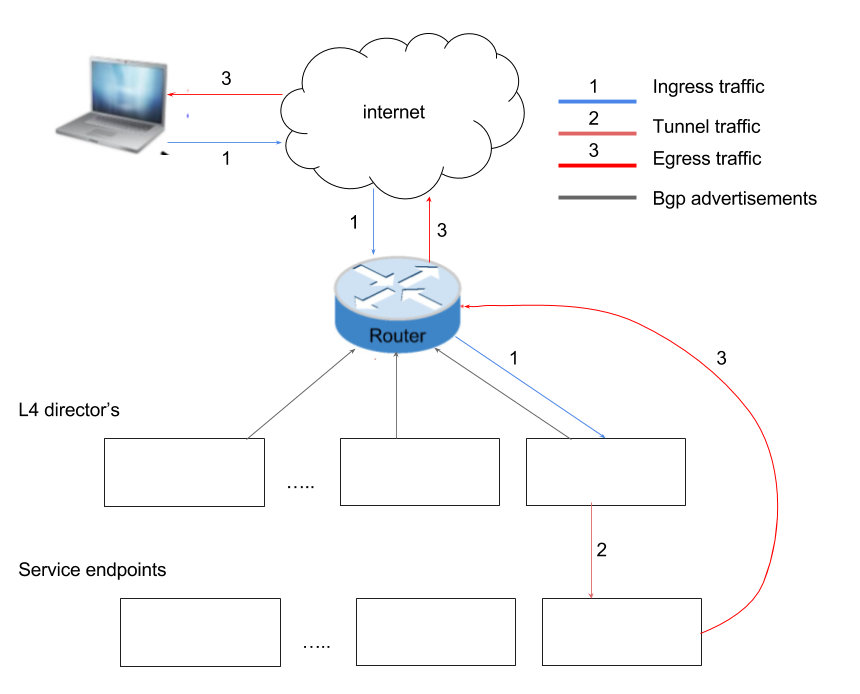

Below figure shows typical tiered architecture used in the solutions used by web-scale companies.

Below are some of the standard mechanisms these solutions use.

Use of BGP + ECMP

The second tier is a fleet of L4 directors, each of which is a BGP speaker that advertises the service VIP to the BGP router. The router therefore has equal-cost multiple paths (ECMP) to the VIP through the L4 directors. Running BGP on the L4 directors also provides automatic failure detection and recovery. If an L4 director fails or shuts down unexpectedly, the router detects the failure through the BGP session and automatically stops sending traffic to that director. Similarly, when an L4 director comes back up, it starts announcing the route again and the router resumes forwarding traffic to it.
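
To make the failure-detection behavior concrete, here is a minimal Python sketch of a router’s ECMP next-hop set for one VIP. It is a toy model, not a BGP implementation: the `Router` class, its method names and the 90-second hold time are assumptions for illustration. It models only hold-timer expiry, whereas a real session can also be torn down explicitly with a NOTIFICATION message.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

HOLD_TIME = 90.0  # seconds; assumed BGP hold time for this toy model


@dataclass
class Router:
    """Toy model of a router that keeps an ECMP next-hop set per VIP."""

    hold_time: float = HOLD_TIME
    # VIP -> directors currently advertising it (the equal-cost paths)
    routes: Dict[str, Set[str]] = field(default_factory=dict)
    # director -> time its last KEEPALIVE/UPDATE was received
    last_heard: Dict[str, float] = field(default_factory=dict)

    def announce(self, director: str, vip: str, now: float) -> None:
        """A director advertises the VIP: add it as one more equal-cost next hop."""
        self.routes.setdefault(vip, set()).add(director)
        self.last_heard[director] = now

    def keepalive(self, director: str, now: float) -> None:
        """A KEEPALIVE refreshes the hold timer of an established session."""
        if director in self.last_heard:
            self.last_heard[director] = now

    def expire_sessions(self, now: float) -> None:
        """Withdraw every route learned from a director whose hold timer expired."""
        dead = [d for d, t in self.last_heard.items() if now - t > self.hold_time]
        for d in dead:
            del self.last_heard[d]
            for next_hops in self.routes.values():
                next_hops.discard(d)

    def next_hops(self, vip: str) -> List[str]:
        return sorted(self.routes.get(vip, set()))


if __name__ == "__main__":
    r = Router()
    for d in ("10.0.0.1", "10.0.0.2", "10.0.0.3"):
        r.announce(d, "192.0.2.10", now=0.0)
    # 10.0.0.3 crashes and goes silent; the other two keep sending keepalives.
    r.keepalive("10.0.0.1", now=60.0)
    r.keepalive("10.0.0.2", now=60.0)
    r.expire_sessions(now=100.0)
    print(r.next_hops("192.0.2.10"))  # ['10.0.0.1', '10.0.0.2']
```

When the failed director recovers, it simply calls `announce` again and rejoins the ECMP set. No operator action is needed on the router.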

L3/L4 network load balancing

Since the router has multiple paths to the advertised VIP, it can perform ECMP load balancing and distribute the traffic across the tier-2 L4 directors. The router can hash on packet header fields (source and destination IP, ports, etc.) so that all packets belonging to the same flow are always forwarded to the same L4 director. Even if there is more than one router (for redundancy), both routers can forward a given flow to the same L4 director, provided they use the same consistent hashing scheme.
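
The Python sketch below illustrates flow-consistent next-hop selection using rendezvous (highest-random-weight) hashing, which is one form of consistent hashing. Real routers use their own hardware or kernel hash functions, so SHA-256 here is only a deterministic stand-in, and `Flow` and `pick_director` are hypothetical names. Because the choice depends only on the flow and the set of directors, two routers with the same paths pick the same director. Removing one director also moves only the flows that were using it.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    """Header fields a router might hash on (hypothetical helper type)."""
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def _weight(flow: Flow, director: str) -> int:
    """Deterministic pseudo-random weight for a (flow, director) pair."""
    key = "|".join(str(x) for x in (*flow, director)).encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def pick_director(flow: Flow, directors: Sequence[str]) -> str:
    """Rendezvous hashing: choose the director with the highest weight for this flow.

    The result depends only on the flow and the *set* of directors, so two
    routers that learned the same paths in a different order agree.
    """
    return max(directors, key=lambda d: _weight(flow, d))


if __name__ == "__main__":
    flow = Flow("198.51.100.7", "192.0.2.10", 6, 51512, 443)
    router_a = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
    router_b = ["10.0.0.3", "10.0.0.1", "10.0.0.2"]  # same paths, other order
    print(pick_director(flow, router_a) == pick_director(flow, router_b))  # True
```

A plain hash-modulo-N scheme would also keep each flow on one director while the path set is stable. However, it remaps most flows whenever a director joins or leaves, which is why consistent hashing is preferred here.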

L4 director

An L4 director does not proxy the connection; it simply forwards the packets to the selected endpoint, so the L4 director can remain stateless. Because the routers shard traffic across the directors with ECMP, each L4 director can use consistent hashing to select the endpoint, so that every director picks the same endpoint for a particular flow. As a result, even if an L4 director goes down and its flows are rehashed to another director, the traffic still ends up at the same endpoint. Linux’s LVS/IPVS is commonly used as the L4 director.
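
One well-known way for every director to agree on the endpoint is the lookup table used by Google’s Maglev. Below is a simplified Python sketch of its table-population step. The function names, the SHA-256-based hashes and the table size are assumptions (the size must be prime). The endpoints are sorted first so that every director builds an identical table from the same endpoint set.

```python
import hashlib
from typing import List, Sequence


def _h(value: str, seed: str) -> int:
    """Seeded 64-bit hash; SHA-256 is an assumed stand-in for Maglev's hash functions."""
    return int.from_bytes(hashlib.sha256(f"{seed}:{value}".encode()).digest()[:8], "big")


def maglev_table(endpoints: Sequence[str], size: int = 65537) -> List[str]:
    """Populate a Maglev-style lookup table of `size` slots (size must be prime).

    Each endpoint walks its own permutation of slots, (offset + j * skip) mod size,
    and, taking turns with the other endpoints, claims its next free slot.
    """
    eps = sorted(set(endpoints))  # identical input order on every director
    if not eps or size <= len(eps):
        raise ValueError("need at least one endpoint and size > number of endpoints")
    offsets = [_h(e, "offset") % size for e in eps]
    skips = [_h(e, "skip") % (size - 1) + 1 for e in eps]
    next_idx = [0] * len(eps)
    entry = [-1] * size
    filled = 0
    while True:
        for i in range(len(eps)):
            slot = (offsets[i] + next_idx[i] * skips[i]) % size
            while entry[slot] >= 0:  # slot already taken: try the next one in i's permutation
                next_idx[i] += 1
                slot = (offsets[i] + next_idx[i] * skips[i]) % size
            entry[slot] = i
            next_idx[i] += 1
            filled += 1
            if filled == size:
                return [eps[k] for k in entry]


def pick_endpoint(flow_key: str, table: Sequence[str]) -> str:
    """Any director holding the same table maps a flow to the same endpoint."""
    return table[_h(flow_key, "flow") % len(table)]


if __name__ == "__main__":
    table = maglev_table(["10.244.1.5", "10.244.2.7", "10.244.3.9"], size=251)
    print(pick_endpoint("198.51.100.7:51512->192.0.2.10:443/tcp", table))
```

Because endpoints take turns claiming slots, each of the N endpoints ends up owning either floor(M/N) or ceil(M/N) of the M entries. Removing an endpoint reassigns its slots while leaving most of the other endpoints’ entries in place.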

Direct server return

In a typical load balancer acting as a proxy, packets are DNAT’ed to the real server IP. Return traffic must pass back through the same load balancer so that the packets get SNAT’ed (with the VIP as the source IP). This hinders a scale-out approach, particularly when routers are sharding traffic across the L4 directors. To overcome this limitation, the L4 director, as mentioned above, simply forwards the packet without rewriting it (for example, by tunneling it), so that the original packet is delivered to the endpoint as is. Various solutions are available to deliver the traffic to the endpoint (IPVS/LVS DR mode, GRE/IPIP tunnels, etc.). When the endpoint receives the packet, it sees traffic destined to the VIP coming from the original client (of course, the endpoint must be set up to accept traffic for the VIP). Return traffic is therefore sent directly to the client.
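
IPVS tunnel mode (LVS/TUN) delivers packets using IP-in-IP encapsulation. The Python sketch below illustrates only the packet layout in user space and assumes plain IPv4 without options. The director prepends an outer header addressed to the endpoint. The endpoint strips that header and sees the client’s original packet, which is still addressed to the VIP. The helper names and addresses are hypothetical. In practice the kernel does this work: IPVS on the director, and an IPIP tunnel interface on the endpoint.

```python
import socket
import struct

IPPROTO_IPIP = 4  # IP-in-IP encapsulation (RFC 2003)
IPPROTO_TCP = 6


def checksum(header: bytes) -> int:
    """Internet checksum (RFC 1071): ones' complement of the ones' complement sum."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipv4_header(src: str, dst: str, proto: int, payload_len: int, ttl: int = 64) -> bytes:
    """Build a minimal 20-byte IPv4 header (no options) with a valid checksum."""
    hdr = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,              # version 4, IHL 5 (20-byte header)
        0,                 # DSCP/ECN
        20 + payload_len,  # total length
        0,                 # identification
        0,                 # flags / fragment offset
        ttl,
        proto,
        0,                 # checksum placeholder, filled in below
        socket.inet_aton(src),
        socket.inet_aton(dst),
    )
    return hdr[:10] + struct.pack("!H", checksum(hdr)) + hdr[12:]


def encapsulate(inner: bytes, director_ip: str, endpoint_ip: str) -> bytes:
    """Director: prepend an outer header addressed to the endpoint; inner stays untouched."""
    return ipv4_header(director_ip, endpoint_ip, IPPROTO_IPIP, len(inner)) + inner


def decapsulate(packet: bytes) -> bytes:
    """Endpoint: strip the outer header and return the client's original packet."""
    if packet[9] != IPPROTO_IPIP:
        raise ValueError("not an IP-in-IP packet")
    ihl = (packet[0] & 0x0F) * 4
    return packet[ihl:]


def dst_ip(packet: bytes) -> str:
    return socket.inet_ntoa(packet[16:20])


if __name__ == "__main__":
    vip, client = "192.0.2.10", "198.51.100.7"
    segment = bytes(20)  # placeholder for the client's TCP segment
    original = ipv4_header(client, vip, IPPROTO_TCP, len(segment)) + segment
    tunneled = encapsulate(original, director_ip="10.0.0.1", endpoint_ip="10.244.1.5")
    print(dst_ip(tunneled))               # 10.244.1.5
    print(dst_ip(decapsulate(tunneled)))  # 192.0.2.10
```

Because the decapsulated packet still carries the client as its source and the VIP as its destination, the endpoint replies from the VIP straight to the client. The return path never touches the director.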

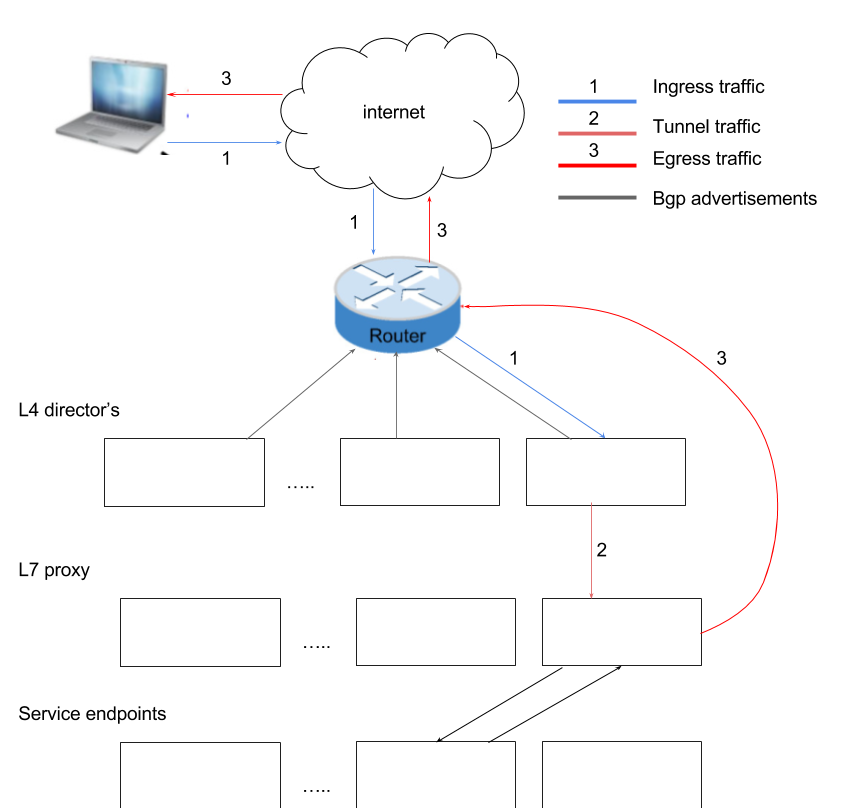

L4/L7 split design

Above basic mechanisms can be extended to implement application load balancing. Whats is called L4/L7 split design as shown below.

How does this apply to Kubernetes?

Kubernetes provides multiple ways to expose a service: NodePort, ClusterIP, external IPs, etc. When the cluster is running on a public cloud, these underlying mechanisms can be hooked into cloud load balancers (such as ELB/ALB on AWS), so some of the problems are solved for you. In bare-metal deployments, however, exposing services outside the cluster is particularly challenging. You can solve the problem in multiple ways. Kube-router already has the ability to advertise cluster IPs and external IPs to configured BGP peers, so that services can easily be exposed outside the cluster. Kube-router also supports setting up direct server return using LVS/IPVS tunnel mode. With the building blocks provided by Kube-router, you can design how services are exposed outside the cluster in the same manner as the web-scale companies mentioned above.

Demo

Please watch the demo below to see how kube-router converts each cluster node into an L4 director built on top of IPVS/LVS. Through kube-router, each node also advertises the service external IP to the configured BGP router. In the demo, a standard Linux machine running Quagga is used as the router, and Linux’s native flow-based ECMP load balancing spreads the traffic across the nodes.